Bolt3D: Generating 3D Scenes in Seconds

TL;DR: Feed-forward 3D scene generation in 6.25s on a single GPU.

How it works

Given one or more input images, we generate multi-view Splatter Images. To do so, we first generate the scene's appearance and geometry with a multi-view diffusion model. Splatter Images are then regressed from these outputs by a Gaussian Head. Finally, the 3D Gaussians from all Splatter Images are combined to form the 3D scene.
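The last step, combining the Gaussians from several Splatter Images, can be illustrated with a minimal NumPy sketch. A Splatter Image stores one 3D Gaussian per pixel. The sketch assumes that every Splatter Image already expresses its Gaussians in a shared world frame, so combining reduces to flattening each image into a list of Gaussians and concatenating the lists. The `SplatterImage` container, its field names, and `combine_splatter_images` are hypothetical and are not Bolt3D's actual code.

```python
from dataclasses import dataclass

import numpy as np


@dataclass
class SplatterImage:
    """Hypothetical container: one 3D Gaussian per pixel of an H x W image."""
    means: np.ndarray      # (H, W, 3) Gaussian centres, assumed to be in a shared world frame
    scales: np.ndarray     # (H, W, 3) per-axis scales
    rotations: np.ndarray  # (H, W, 4) unit quaternions
    opacities: np.ndarray  # (H, W, 1) values in [0, 1]
    colors: np.ndarray     # (H, W, 3) RGB


def combine_splatter_images(images):
    """Flatten each Splatter Image into (H*W, C) arrays and concatenate across views."""
    fields = ("means", "scales", "rotations", "opacities", "colors")
    combined = {}
    for name in fields:
        per_view = [getattr(img, name).reshape(-1, getattr(img, name).shape[-1]) for img in images]
        combined[name] = np.concatenate(per_view, axis=0)
    return combined


# Usage: three random 4 x 6 Splatter Images yield 3 * 4 * 6 = 72 Gaussians.
rng = np.random.default_rng(0)


def random_splatter(h, w):
    q = rng.normal(size=(h, w, 4))
    return SplatterImage(
        means=rng.normal(size=(h, w, 3)),
        scales=np.abs(rng.normal(size=(h, w, 3))),
        rotations=q / np.linalg.norm(q, axis=-1, keepdims=True),
        opacities=rng.uniform(size=(h, w, 1)),
        colors=rng.uniform(size=(h, w, 3)),
    )


views = [random_splatter(4, 6) for _ in range(3)]
scene = combine_splatter_images(views)
```

If the Splatter Images were instead predicted in per-camera frames, each view's means (and rotations) would first need to be transformed by that camera's pose before concatenation.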

Interactive Viewer

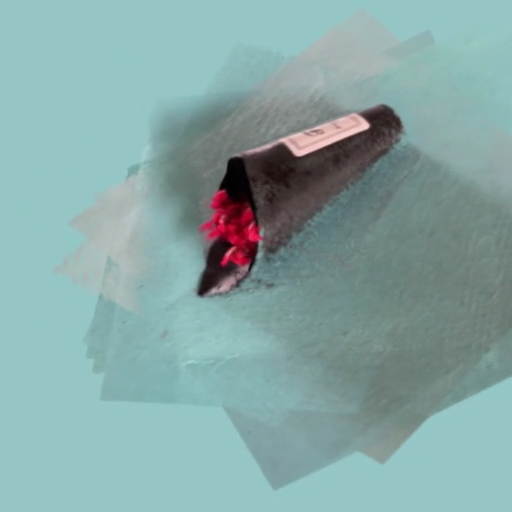

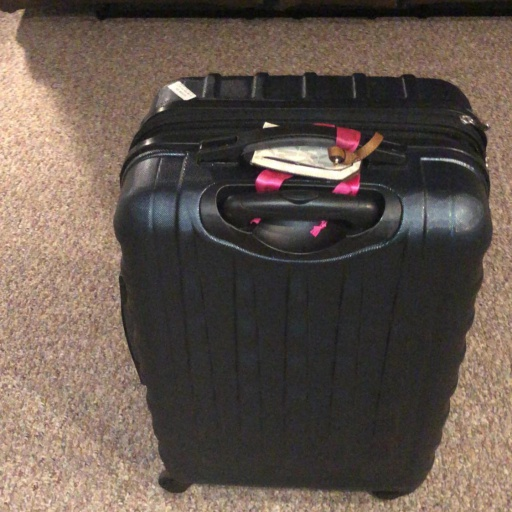

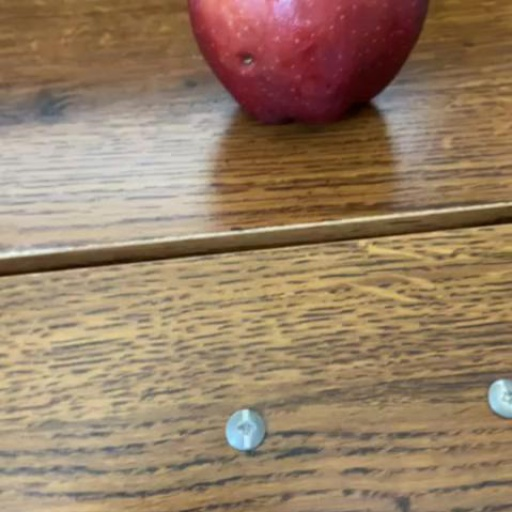

Click on the images below to render 3D scenes in real-time in your browser.

Result Gallery

Variable number of input views

Bolt3D accepts a variable number of input images. Where input images are available, the model adheres to them, and it generates the unobserved scene regions without any reprojection or inpainting mechanisms.
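The page does not describe how the diffusion model is conditioned on a varying number of views. One common approach in multi-view diffusion models, shown here purely as an assumption, is to keep the latents of observed views clean, noise the latents of views to be generated, and append a binary mask channel that tells the model which views are inputs. The sketch below builds such an input at training time using variance-preserving noising. `build_model_input` and its parameters are hypothetical.

```python
import numpy as np


def build_model_input(latents, observed, noise_level, rng):
    """Assemble a hypothetical multi-view diffusion input for any number of observed views.

    latents:     (V, h, w, c) latents of all V views
    observed:    (V,) bool, True where an input image is provided
    noise_level: sigma in [0, 1] applied to the views being generated
    """
    noise = rng.normal(size=latents.shape)
    # Variance-preserving noising: sqrt(1 - sigma^2) * x0 + sigma * eps.
    noisy = np.sqrt(1.0 - noise_level**2) * latents + noise_level * noise
    view_is_input = observed[:, None, None, None]  # (V, 1, 1, 1) for broadcasting
    # Observed views stay clean; unobserved views receive noisy latents.
    x = np.where(view_is_input, latents, noisy)
    # Binary mask channel: 1 for conditioning views, 0 for views to generate.
    mask = np.broadcast_to(view_is_input, latents.shape[:-1] + (1,)).astype(latents.dtype)
    return np.concatenate([x, mask], axis=-1)


# Usage: 8 views, of which the first 2 are input images.
rng = np.random.default_rng(0)
latents = rng.normal(size=(8, 4, 4, 8))
observed = np.zeros(8, dtype=bool)
observed[:2] = True
inp = build_model_input(latents, observed, noise_level=0.7, rng=rng)
```

With this design, the same network handles one input view or several. Only the `observed` mask changes, which is one way a model can follow conditioning where it exists and generate everything else.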

| 1 input view | 1-view reconstruction | 2 input views | 2-view reconstruction |
|---|---|---|---|

Geometry VAE

The key to generating high-quality 3D scenes with a latent diffusion model is our Geometry VAE, which compresses pointmaps with high accuracy. We find empirically that a VAE with a transformer decoder is better suited to autoencoding pointmaps than either a VAE with a convolutional decoder or a VAE pre-trained to autoencode images. Below we visualize colored point clouds obtained from (1) pointmaps from the data, (2) pointmaps autoencoded with our VAE, (3) pointmaps autoencoded with a VAE with a convolutional decoder, and (4) pointmaps autoencoded with a pre-trained image VAE.
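A pointmap stores an (x, y, z) position for every pixel. The minimal sketch below obtains one by unprojecting a depth map through a pinhole camera, then scores an autoencoder reconstruction by the mean Euclidean distance between corresponding points. Both choices are assumptions for illustration: the page does not say how its pointmaps are produced, and the comparison it shows is visual rather than based on this metric. `depth_to_pointmap` and `mean_point_error` are hypothetical helpers.

```python
import numpy as np


def depth_to_pointmap(depth, fx, fy, cx, cy):
    """Unproject an (H, W) depth map into an (H, W, 3) camera-space pointmap (pinhole model)."""
    h, w = depth.shape
    v, u = np.meshgrid(np.arange(h), np.arange(w), indexing="ij")  # pixel rows, columns
    x = (u - cx) / fx * depth
    y = (v - cy) / fy * depth
    return np.stack([x, y, depth], axis=-1)


def mean_point_error(pointmap, reconstruction):
    """Average Euclidean distance between corresponding points of two pointmaps."""
    return float(np.linalg.norm(pointmap - reconstruction, axis=-1).mean())


# Usage: a flat 2 x 3 depth map at distance 2, and a "reconstruction" offset by 0.01 per axis.
depth = np.full((2, 3), 2.0)
pm = depth_to_pointmap(depth, fx=1.0, fy=1.0, cx=1.0, cy=0.5)
err = mean_point_error(pm, pm + 0.01)
```

Because errors are measured in 3D rather than in pixel intensities, a small per-coordinate error can still displace points noticeably. This is one reason an autoencoder tuned for images need not transfer well to pointmaps.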

| Data | Our AE | Conv. AE | Image AE |
|---|---|---|---|

Comparison to other methods

We compare renders from our method, Bolt3D, with those of feed-forward and optimization-based methods. Our method gives feed-forward 3D reconstruction models generative capabilities and significantly reduces inference cost compared to optimization-based methods.

Acknowledgements

We would like to express our deepest gratitude to Ben Poole for helpful suggestions, guidance, and contributions.

We also thank George Kopanas, Sander Dieleman, Matthew Burruss, Matthew Levine, Peter Hedman, Songyou Peng, Rundi Wu, Alex Trevithick, Daniel Duckworth, Hadi Alzayer, David Charatan, Jiapeng Tang and Akshay Krishnan for valuable discussions and insights.

Finally, we extend our gratitude to Shlomi Fruchter, Kevin Murphy, Mohammad Babaeizadeh, Han Zhang and Amir Hertz for training the base text-to-image latent diffusion model.

Website template is borrowed from CAT3D and CAT4D.

BibTeX

@article{szymanowicz2025bolt3d,
  title={{Bolt3D: Generating 3D Scenes in Seconds}},
  author={Szymanowicz, Stanislaw and Zhang, Jason Y. and Srinivasan, Pratul
          and Gao, Ruiqi and Brussee, Arthur and Holynski, Aleksander and
          Martin-Brualla, Ricardo and Barron, Jonathan T. and Henzler, Philipp},
  journal={International Conference on Computer Vision},
  year={2025}
}